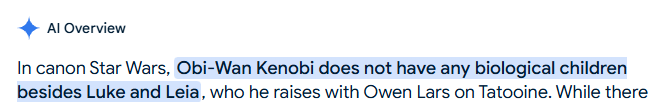

Every time I do a Google search, the very first response is an "AI overview", which according to Google is designed to do all the hard work for me. "Hard work" is apparently what people consider they are doing when they are forced to type a couple of words and then scan a list of results before deciding to read any. Sounds exhausting, I know.

The AI overview instead provides a distillation of numerous results, so you don't have to decide what to believe. You just place your faith in Google, and they do all the thinking for you. What a relief!

Except...at the bottom of every AI overview, there is that niggling little warning in small letters: "AI overviews may contain mistakes".

So...Google is saying "Leave it to us. We'll do the work and the research, and all you have to do is slurp it up out of the trough and carry on!"...but they are also saying, rather quietly, "You can't trust a thing you read here, but it's not our fault!"

Try Googling something with which you are extremely familiar. Read the AI overview. You will be astonished to see not only simple mistakes, but glaring inaccuracies which can, at best, lead you down endless dead ends and wrong paths. At worst, they can trick you into doing things that can be downright dangerous. And if you listen to them, and wind up getting a boo-boo or breaking something expensive, you can't come back at them because they'll just say "Hey...you can't trust what we say...and we told you that up front!"

The really sad thing is seeing how many people come onto a discussion site like MFK or others, read a question posted by someone else, and then immediately dive into their computer to come up with an answer for that person. ChatGPT (correct letters?...don't know, don't really care...) seems to be a popular one right now. We have people post on question threads with answers that they freely admit come from that source.

Why? Don't you think that the OP asking the question was capable of looking on the internet at ChatGPT or Google or whatever else? Does it not seem likely that they are looking for a human being to answer their question, preferably from personal experience with the subject matter? How does regurgitating this crap for them assist them in any way?

Not too long ago, such posts were often prefaced with comments like "To my knowledge..." or "As far as I know..." or other phrases which could usually be interpreted as "I don't have a clue, but here's what other people who don't have a clue are saying". I'm not seeing that as much anymore, just lots of admissions that "ChatGPT is the source of this nonsense so don't blame me if it's 100% BS".

Anybody seen the Rick and Morty episode where Rick conjures up Mr. Meeseeks? Mr. Meeseeks exists only to fulfill a function that he is asked to fulfill by his conjuror. After that he ceases to exist, which is what he most fervently wants.

The only difference is...the ChatGPT-ers or Googlists or whatever we should call them...don't cease to exist after fulfilling (what they perceive to be) their function. They just scamper off to "help" someone else...
